Objective:

How do LLMs work and operate? Enabling LLMs at scale: explore recent AI and generative AI language models.

Steps

- Math on words: turn words into coordinates.

- Statistics on words: given the context, what is the probability of what comes next?

- Vectors on words: compare them using cosine similarity.

- How to train: use a Markov chain to predict the next token.

- Tokenisation.
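The "vectors on words" and cosine similarity ideas above can be sketched in a few lines. This is only an illustration: the three-dimensional coordinates below are made up by hand, whereas a real model learns vectors with hundreds or thousands of dimensions.

```python
import math

# Hypothetical hand-made "coordinates" for three words (not from a real model).
embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "banana": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

royal = cosine_similarity(embeddings["king"], embeddings["queen"])
fruit = cosine_similarity(embeddings["king"], embeddings["banana"])
print(f"king vs queen:  {royal:.3f}")
print(f"king vs banana: {fruit:.3f}")
```

With these made-up numbers, "king" scores closer to "queen" than to "banana", which is the behaviour expected when related words sit near each other.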

Tokeniser: map from token to number
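A minimal sketch of that token-to-number mapping, assuming the simplest possible scheme: split on whitespace and give each new word the next free id. The function names (`build_vocab`, `encode`, `decode`) and the `<unk>` placeholder are illustrative choices; production LLM tokenisers typically work on subword pieces rather than whole words.

```python
def build_vocab(texts):
    """Assign an integer id to every distinct token seen in the texts."""
    vocab = {"<unk>": 0}  # id 0 is reserved for tokens never seen before
    for text in texts:
        for token in text.lower().split():
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab

def encode(text, vocab):
    """Turn text into a list of ids, falling back to <unk> for unknown tokens."""
    return [vocab.get(token, vocab["<unk>"]) for token in text.lower().split()]

def decode(ids, vocab):
    """Turn a list of ids back into text."""
    id_to_token = {i: token for token, i in vocab.items()}
    return " ".join(id_to_token[i] for i in ids)

vocab = build_vocab(["the cat sat", "the dog sat down"])
ids = encode("the dog sat", vocab)
print(ids)
print(decode(ids, vocab))
```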

- Pre-training: tokenise input using NLP techniques

- The LLM looks at context (nearby tokens) in order to predict the next token
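Predicting from context can be sketched with the Markov chain simplification mentioned in these notes: count which token follows which, then turn the counts into probabilities. This is an assumption-laden toy where the context is only the single previous token; an actual LLM uses a neural network over a much longer context. The corpus and function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each previous token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Probability of each possible next token given the previous one."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {token: count / total for token, count in followers.items()}

corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
probs = next_token_probs(model, "the")
print(probs)
print("most likely after 'the':", max(probs, key=probs.get))
```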

Different implementations for different languages: use different tokenisers, or translate afterwards.

Journey to scale:

- Demos, POC (plan to scale): understand limitations

- Beyond experiments and before production:

- Enterprise level: translate terms so they can use governance techniques.

Building:

Software Development Life Cycle

For GenAI: building an application with GenAI features

- Plan: use case, prompts, architecture (cloud or on site)

- Build: vector database

- Test: quality and responsible AI.

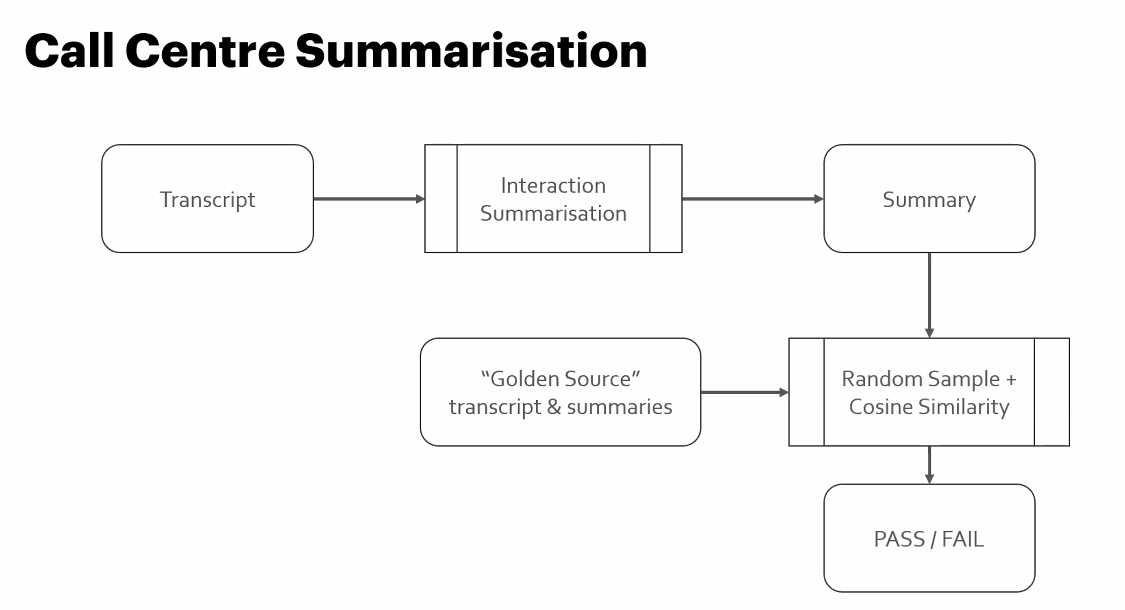

Call summarisation

Transcript → summariser → summary

Source: human-labelled transcripts, used to check the summariser.

N-gram analysis: for when specific words really matter
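One way to use n-grams when checking a summariser against a human-written reference is to measure how many of the reference's n-grams also appear in the generated summary. The sketch below is similar in spirit to recall-based overlap metrics such as ROUGE-N, but it is a simplified stand-in rather than that metric's official implementation; the example sentences are invented.

```python
from collections import Counter

def ngrams(text, n):
    """Count every run of n consecutive tokens in the text."""
    tokens = text.lower().split()
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def ngram_recall(reference, candidate, n=2):
    """Fraction of the reference's n-grams that also occur in the candidate."""
    ref = ngrams(reference, n)
    cand = ngrams(candidate, n)
    if not ref:
        return 0.0
    overlap = sum((ref & cand).values())  # & keeps the minimum count per n-gram
    return overlap / sum(ref.values())

reference = "customer asked for a refund on the damaged laptop"
good = "customer asked for a refund on the laptop"
bad = "caller had a question about an order"

print(ngram_recall(reference, good))
print(ngram_recall(reference, bad))
```

A summary that drops a key word such as "damaged" loses every n-gram containing it, which is why this kind of check is useful when specific words matter.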

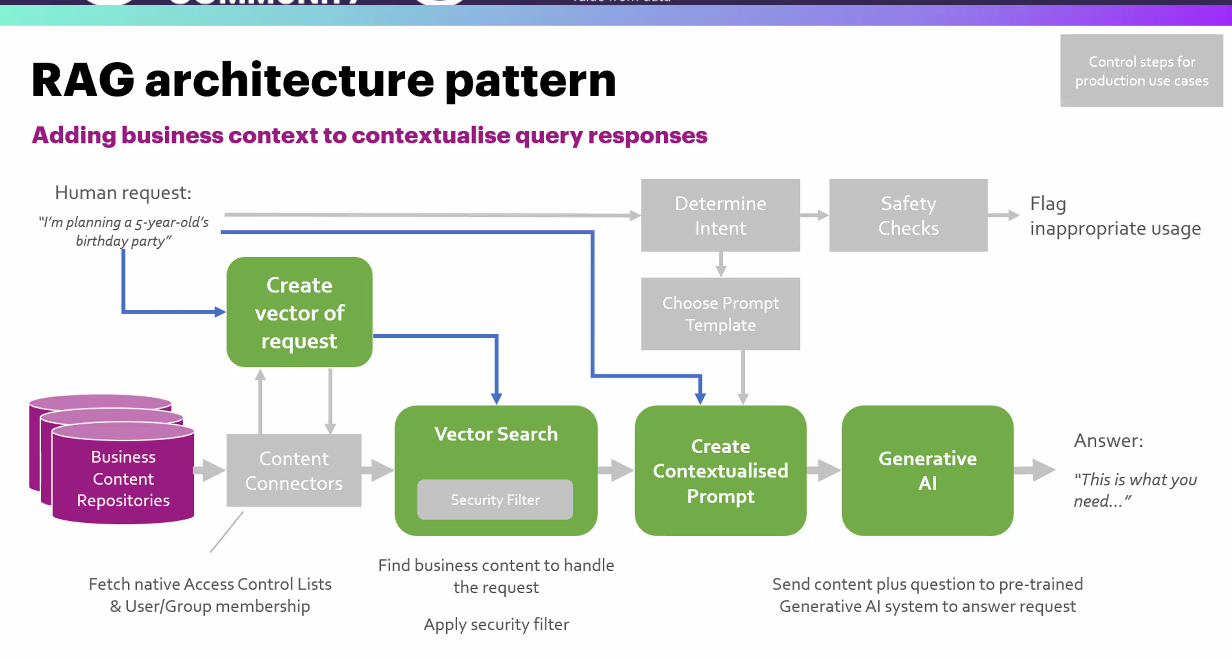

RAG

Use relevant data to make the response better.
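A rough sketch of the retrieval half of RAG, assuming a tiny in-memory document list and plain word-count vectors in place of a real embedding model and vector database. The documents, the prompt wording, and the function names are all hypothetical; the resulting prompt would then be sent to whichever LLM is in use.

```python
import math
from collections import Counter

# Hypothetical knowledge base; a real system would keep these in a vector database.
documents = [
    "Refunds are processed within five working days.",
    "Our support line is open from 9am to 5pm on weekdays.",
    "Every laptop comes with a two year warranty.",
]

def vectorise(text):
    """Bag-of-words vector: word -> count (a stand-in for a learned embedding)."""
    return Counter(word.strip(".,?!").lower() for word in text.split())

def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, docs, k=1):
    """Return the k documents most similar to the question."""
    q = vectorise(question)
    return sorted(docs, key=lambda d: cosine(q, vectorise(d)), reverse=True)[:k]

def build_prompt(question, docs):
    """Put the retrieved text in front of the question before calling the LLM."""
    context = "\n".join(retrieve(question, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

prompt = build_prompt("How long is the laptop warranty?", documents)
print(prompt)
```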

GAN

For image models.

Examples of image models: Midjourney, Stable Diffusion, DALL-E 3 (Stable Diffusion and DALL-E 3 are diffusion models rather than GANs).

Image model techniques:

- text to image

- image to image

Notes:

Use LLMs to extract short pieces of information, then cluster them. One full pass over the training data is called an epoch.
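To make the idea of an epoch concrete, here is a toy training loop, assuming a single-weight model fitted to three made-up points with plain gradient descent. It is nothing like LLM training in scale, but the outer loop has the same meaning: each iteration is one full pass over the training data.

```python
# Made-up training data following y = 2 * x.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

weight = 0.0          # the single parameter being learned
learning_rate = 0.05  # illustrative value
losses = []

for epoch in range(20):          # one epoch = one pass over all of the data
    total_loss = 0.0
    for x, y in data:
        error = weight * x - y
        total_loss += error ** 2
        # Gradient of the squared error with respect to the weight is 2 * error * x.
        weight -= learning_rate * 2 * error * x
    losses.append(total_loss / len(data))

print(f"learned weight: {weight:.4f}")
print(f"loss in first epoch: {losses[0]:.4f}, in last epoch: {losses[-1]:.4g}")
```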