Manifold learning is a powerful approach for high-dimensional data exploration, focusing on uncovering the lower-dimensional manifold that the data resides on. These algorithms aim to identify and map the underlying low-dimensional structure, or manifold, that the data is assumed to lie on, within the high-dimensional space. This is particularly useful for reducing dimensionality while preserving the intrinsic properties of the data.

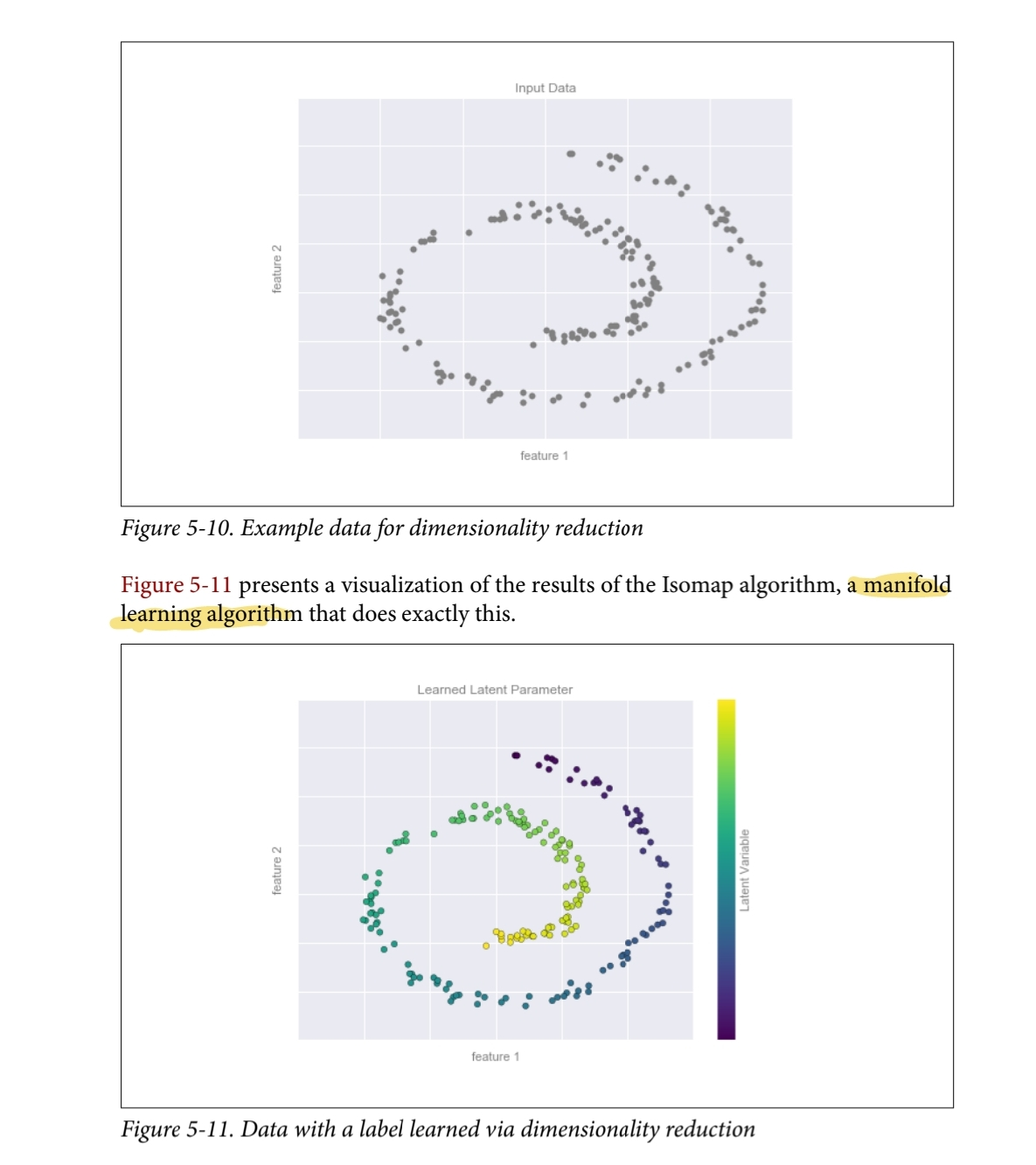

Methods like Isomap aim to preserve the geodesic distances between points, which better represent the data’s true structure than straight-line distances in high-dimensional space. This enables effective Dimensionality Reduction for non-linear data while preserving important relationships between data points.

Key Concepts of Manifold Learning:

-

High-Dimensional Data:

- In many machine learning problems, data can have a high number of dimensions (features), making it challenging to analyze directly. However, often this high-dimensional data lies on a much simpler, lower-dimensional structure, or manifold, embedded in the high-dimensional space. Manifold learning seeks to find and represent this lower-dimensional structure, simplifying the analysis and visualization of complex datasets.

-

Manifold Assumption:

- Manifold learning assumes that although the data may appear high-dimensional, the true degrees of freedom are much fewer. This means the data can be represented in a lower-dimensional space without losing important information about its structure.

-

Geodesic Distances:

- In manifold learning, the goal is often to preserve certain distances or relationships between data points. Isomap, for example, is a popular manifold learning algorithm that aims to preserve geodesic distances—the shortest paths between points along the manifold. These distances represent the true relationships between points in the underlying lower-dimensional space, even though they may seem far apart in the high-dimensional space.

-

- Like other dimensionality reduction techniques (such as PCA), manifold learning helps reduce the number of features in the data. However, manifold learning is particularly effective when the data is non-linear, meaning traditional linear techniques like PCA might not capture the true underlying structure. Algorithms like Isomap, Locally Linear Embedding (LLE), and t-SNE are examples of manifold learning methods that handle such non-linear structures.

Example: Isomap

- Isomap is a manifold learning algorithm that tries to preserve the geodesic distances between all pairs of data points. It computes these distances by constructing a graph in which the edges represent the shortest path along the manifold (not the straight-line distance in high-dimensional space). Then, it uses these distances to map the data to a lower-dimensional space, retaining the structure of the original data.