Q-learning is a value-based, model-free Reinforcement learning algorithm where the agent learns the optimal policy by updating Q-values based on the rewards received. It is particularly useful in discrete environments like grids.

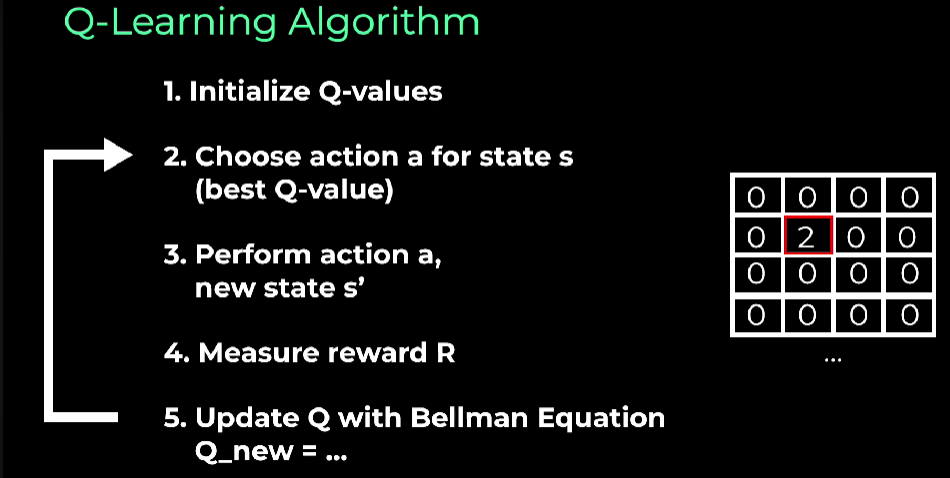

Uses a Q-Table which is populated by Q-values which are the maximum expected future reward for the given state and action. We improve the Q-Table in an iterative approach

Resources:

Q-learning update rule:

The left hand side gets updated (Bellman Equations)

Explanation:

- : The Q-value of the current state and action .

- : The learning rate, determining how much new information overrides old information.

- : The reward received after taking action from state .

- : The discount factor, balancing immediate and future rewards.

- : The maximum Q-value for the next state across all possible actions .

Notes:

- Q-learning is well-suited for environments where the state and action spaces are discrete and manageable in size.

- The algorithm is designed to converge to the optimal policy, even in non-deterministic environments, as long as each state-action pair is sufficiently explored.

- Exploration vs Exploitation